How AvaSure Builds AI for Patient Safety

Patient Safety, Technology

January 9, 2026

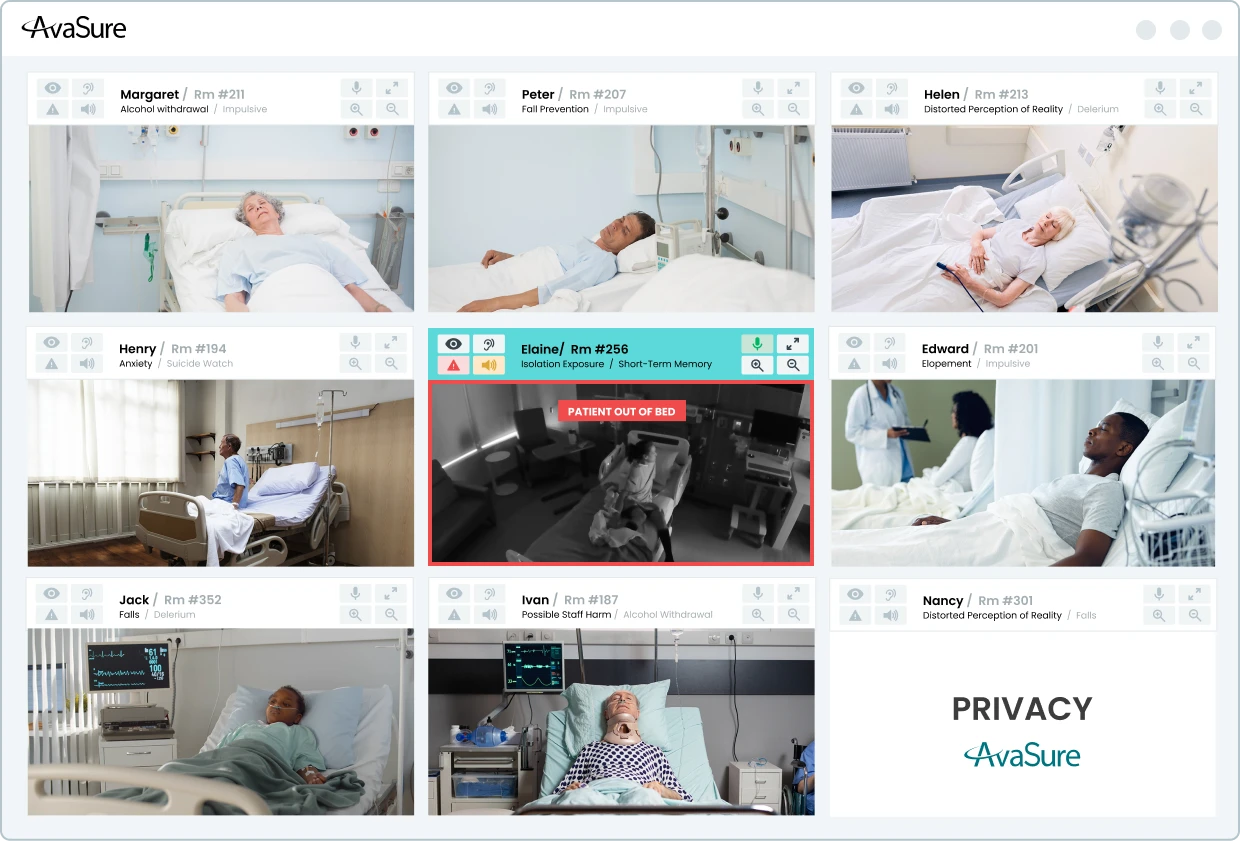

Hospitals are under pressure to reduce preventable harm from falls, elopement, and other adverse events while maintaining a sustainable workload for clinicians. Camera-based monitoring and virtual sitting programs such as AvaSure’s Continuous Observation platform have already demonstrated that continuous observation can reduce falls and injuries, but human-only monitoring does not scale indefinitely. Many organizations are now exploring Artificial Intelligence to extend the reach of their teams and to detect risk earlier than a human observer can consistently manage.

At AvaSure, we view Artificial Intelligence as an extension of the virtual care platform that more than 1,200 hospitals already use for continuous observation, virtual nursing, and specialty consults. Our goal is not to replace human judgement. Instead, we want to build behavior-aware monitoring that can recognize patterns associated with risk, surface those patterns to caregivers in time to intervene, and do so in a way that is technically sound, clinically grounded, and respectful of patient privacy.

This blog describes the design principles behind our Falls and Elopement Artificial Intelligence system. AvaSure uses Computer Vision, a subset of Artificial Intelligence, to detect high-risk scenarios before an adverse event occurs. Our Computer Vision models perceive the hospital room environment by learning which situations are unsafe for patients. We also describe how we measure the clinical performance of these models and how our onboarding process for new hospitals supports that performance. Built on Oracle Cloud Infrastructure (OCI), this cloud-based system provides a scalable foundation that extends beyond fall and elopement prevention into broader ambient AI applications.

What are the Challenges of Computer Vision Models for Falls and Elopement?

Falls and elopements rarely occur as single, isolated moments. They emerge over a sequence of behaviors. A patient may shift position in bed, sit upright, move to the edge of the bed, stand, and then begin to move away. Building Computer Vision models that understand such behavior is challenging, however. Staff and visitors come and go, sometimes obstructing the view of the camera. Lighting changes over the course of the day and night, including the use of infrared lighting in low-light situations. All of these challenges are part of the design space, and a monitoring system that considers a single video frame at a time, without regard to such confounding elements, can miss much of this context.

An important way to adapt to these challenges is to select the right type of camera device, because the device also affects how the system perceives the hospital room environment. AvaSure offers a variety of camera devices, including Guardian Dual Flex, Guardian Mobile Devices, and Guardian Ceiling Devices. Guardian Dual Flex devices provide a fixed camera dedicated to Artificial Intelligence monitoring. Mobile units introduce variation in pan, tilt, zoom, and location within the room, and room layouts themselves vary across and within hospital systems. Guardian Ceiling devices provide a different perspective compared to Dual Flex and Mobile devices.

AvaSure’s Computer Vision system and onboarding processes are built to adapt rather than to assume a single, fixed installation environment. Our current models for Falls and Elopement focus on understanding posture and presence over time while accommodating variations in lighting and environment. The system distinguishes among three patient postures: lying in bed, sitting on the side of the bed, and standing. These states are evaluated over short time windows and combined with rules that relate them to risk. For example, a transition from lying to sitting on the side of the bed may be treated as an early warning, whereas a transition to standing unassisted may prompt a higher-severity alert.
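To make the idea of rules that relate posture transitions to risk concrete, here is a minimal sketch in Python. The posture names, the severity labels, the rule table, and the `alert_for_transition` function are all illustrative assumptions rather than AvaSure's actual rules, and the sketch ignores whether staff are present to assist the patient.

```python
from enum import Enum
from typing import Optional


class Posture(Enum):
    LYING = "lying"
    SITTING_EDGE = "sitting_edge"
    STANDING = "standing"


# Hypothetical rule table: (previous posture, current posture) -> severity.
TRANSITION_RULES = {
    (Posture.LYING, Posture.SITTING_EDGE): "early_warning",
    (Posture.SITTING_EDGE, Posture.STANDING): "high",
    (Posture.LYING, Posture.STANDING): "high",
}


def alert_for_transition(previous: Posture, current: Posture) -> Optional[str]:
    """Return an alert severity for a posture transition, or None if no rule applies."""
    return TRANSITION_RULES.get((previous, current))
```

Keeping the rules in a lookup table separate from the posture model is one way to let the mapping from behavior to alert change without retraining anything.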

How Does Falls and Elopement AI Perceive the Patient Room?

The Falls and Elopement models employ a three-layer approach to perceive conditions within the hospital room.

- Lowest Layer: Detect whether there are people in the frame and estimate how many.

- Middle Layer: When there is a single person in view, form an understanding of posture and location relative to the bed and other furniture.

- Top Layer: Combine these posture estimates over time and apply rules that map temporal patterns to alerts.

This layered approach is intentional. Computer vision research has shown that models built only around pose estimation can struggle with common conditions in clinical rooms, such as occlusions from blankets and equipment, low light, and cluttered backgrounds. By combining person detection with semantic posture classification and temporal reasoning, we maintain flexibility in camera hardware while capturing clinically meaningful patterns in the room.

The temporal aspect is central to how the system works. Rather than categorizing each frame in isolation, the models consider short windows of behavior and pay attention to transitions. A single frame showing a patient near the edge of the bed may not be sufficient to decide whether they are attempting to stand or simply shifting position. A sequence of frames that show a consistent movement from reclined to upright to standing is more informative. Alerts are based on this kind of sequence-aware understanding rather than a momentary snapshot.
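One simple way to illustrate sequence-aware reasoning is to smooth per-frame posture labels with a majority vote over a short trailing window and then look for changes in the smoothed label. The sketch below is an assumption-laden illustration, not a description of AvaSure's models: the label strings, the window size, and the function names `smooth_postures` and `transitions` are hypothetical.

```python
from collections import Counter, deque
from typing import Iterable, List, Tuple


def smooth_postures(labels: Iterable[str], window: int = 5) -> List[str]:
    """Replace each per-frame label with the majority label of the trailing window."""
    recent = deque(maxlen=window)
    smoothed = []
    for label in labels:
        recent.append(label)
        # On a tie, most_common keeps the label seen earliest in the window.
        smoothed.append(Counter(recent).most_common(1)[0][0])
    return smoothed


def transitions(smoothed: List[str]) -> List[Tuple[int, str, str]]:
    """List (frame_index, from_label, to_label) wherever the smoothed label changes."""
    return [
        (i, smoothed[i - 1], smoothed[i])
        for i in range(1, len(smoothed))
        if smoothed[i] != smoothed[i - 1]
    ]
```

With a window of three frames, a single stray "standing" frame inside a run of "lying" frames produces no transition, while a sustained change from "lying" to "sitting" produces exactly one.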

AvaSure designs for known sources of variability. Mobile cameras introduce changes in viewpoint and zoom as they are repositioned. Different rooms may be arranged in mirror images, with beds and bathrooms on opposite sides. Lighting can range from bright daytime scenes to low-light conditions at night. During model development and onboarding, we deliberately include these variations so that the system can learn to interpret similar behaviors across a range of visual conditions.

How Does AI for Patient Safety Learn Real-World Clinical Complexity?

Computer Vision models learn by being fed many examples of different situations. For example, these could be labeled as “a patient lying in bed” or “a patient standing near the side of the bed”. The learning (or training) process then iteratively adjusts the model parameters based on how well the current model predicts the label associated with each example. This process repeats until the model performs well enough. There are several methods for capturing data for training, including having actors stage scenes and having computers generate synthetic scenes by rendering life-like situations.
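The iterative adjustment described above can be shown with a deliberately tiny example. The sketch below trains a one-feature logistic regression with batch gradient descent. It is a toy, not a vision model: the single numeric feature (imagine a normalized "torso height" score), the labels, and the function names are assumptions made only to show the loop of predict, measure error, adjust.

```python
import math
from typing import List, Tuple


def predict(w: float, b: float, x: float) -> float:
    """Probability that the example is labeled 1 (for instance, standing)."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def train_logistic(
    examples: List[Tuple[float, int]], epochs: int = 500, lr: float = 0.5
) -> Tuple[float, float]:
    """Fit w and b by batch gradient descent on the logistic loss."""
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in examples:
            error = predict(w, b, x) - y  # how wrong the current model is
            grad_w += error * x
            grad_b += error
        # Nudge the parameters in the direction that reduces the error.
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b
```

Trained on a handful of labeled examples with low feature values labeled 0 and high values labeled 1, the fitted model assigns a low probability to a low value and a high probability to a high value. Real posture models have vastly more parameters, but the loop has the same shape.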

However, models trained only on staged scenes and synthetic data tend to perform best on those same controlled scenarios. Real hospital rooms are more complex. Patients vary widely in demographics, mobility, and behavior. Equipment is added and removed. Staff and visitors move through the field of view in unpredictable ways. To build models of AI for patient safety that can handle this complexity, we need to learn from images that reflect it. At the same time, patient identity and privacy must be preserved.

AvaSure maintains a patent-pending patient anonymization system that allows us to incorporate real-world imagery into training and evaluation without retaining identifiable visual information. The system applies transformations that remove or obscure personally identifiable features and then presents the transformed frames to a human reviewer. The reviewer confirms that anonymization is complete and assigns labels describing the posture and relevant contextual details. Only after this confirmation do the frames enter curated data sets used for training and for measuring performance in production.

The system captures frames concentrated around ambiguous or clinically relevant situations rather than random samples of uneventful periods. This makes the captured frames particularly useful for improving model performance in the cases where decisions are hardest.
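This post does not say how ambiguous frames are identified. One common technique, shown here purely as an assumption, is margin-based uncertainty sampling: keep a frame when the model's top two posture probabilities are close together. The probability dictionaries, the margin value, and the function names are hypothetical.

```python
from typing import Dict, List


def is_ambiguous(probs: Dict[str, float], margin: float = 0.2) -> bool:
    """True when the top two class probabilities are within `margin` of each other."""
    top_two = sorted(probs.values(), reverse=True)[:2]
    return len(top_two) == 2 and (top_two[0] - top_two[1]) < margin


def select_frames(frame_probs: List[Dict[str, float]], margin: float = 0.2) -> List[int]:
    """Return the indices of frames whose classification is ambiguous."""
    return [i for i, probs in enumerate(frame_probs) if is_ambiguous(probs, margin)]
```

A frame scored 0.90 lying and 0.05 for each other posture would be skipped, while a frame scored 0.45 lying and 0.50 sitting would be selected for review.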

Precision vs Recall: Which Metrics Matter Most for Clinical Success?

When evaluating models in safety-critical domains, accuracy alone is not sufficient. Falls and elopements are relatively rare events compared with the number of hours of observation across a hospital. A system can achieve high overall accuracy by correctly labelling long periods of low-risk behavior yet still miss important events or generate more alerts than staff can reasonably handle.

For this reason, AvaSure frames performance in terms that reflect the realities of clinical operations. Precision captures how often an alert corresponds to a meaningful event. Recall captures how often the system detects an event when it occurs. The F1 score combines the two into a single measure that balances false positives and missed detections. These metrics tell us how often the system asks for attention when it is truly warranted and how often it remains silent when it should speak up.
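The standard definitions of these metrics are short enough to write out. The sketch below computes them from counts of true positives, false positives, false negatives, and true negatives. The example counts in the note that follows are invented for illustration and are not AvaSure results.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Compute precision, recall, and F1 from alert counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


def accuracy(tp: int, fp: int, fn: int, tn: int) -> float:
    """Fraction of all observation windows that were labeled correctly."""
    return (tp + tn) / (tp + fp + fn + tn)
```

Suppose, hypothetically, that 10,000 observation windows contain 16 real events, and the system raises 10 alerts, 8 of them correct. Accuracy is 0.999 because the 9,982 uneventful windows dominate, yet precision is 0.8 and recall is only 0.5: half of the events were missed.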

In practice, different hospitals and units may prefer different trade-offs. A neurosurgical ward may choose to tolerate more alerts in exchange for fewer missed events, whereas a lower-acuity unit may prioritize reducing unnecessary interruptions. Our models can operate at different points along the precision-recall curve, and part of the onboarding process is to discuss and tune that operating point together with clinical and operational leaders.
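Choosing an operating point can be sketched as picking a score threshold. The example below assumes the model emits a risk score per candidate event and that labeled outcomes are available. It returns the highest threshold that still reaches a target recall, along with the precision at that threshold. The function name, the scores, and the labels are illustrative assumptions.

```python
from typing import List, Optional, Tuple


def threshold_for_recall(
    scores: List[float], labels: List[int], target_recall: float
) -> Optional[Tuple[float, float, float]]:
    """Return (threshold, precision, recall) for the highest threshold meeting the target."""
    positives = sum(labels)
    if positives == 0:
        return None
    # Lowering the threshold can only keep or raise recall, so the first hit is the highest.
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        recall = tp / positives
        if recall >= target_recall:
            return threshold, tp / (tp + fp), recall
    return None
```

A unit that wants every event caught would set a high target recall and accept the lower precision that comes with it. A unit that wants fewer interruptions would set a lower target and get a higher threshold.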

Beyond the initial deployment, AvaSure treats performance as something that must be monitored and maintained. As room layouts, staffing patterns, and patient populations change, the distribution of behaviors the system sees will change as well. By sampling outputs in the field for new models running side by side with existing models, we can compare new model versions against established baselines and roll back changes that do not meet defined criteria.
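A side-by-side comparison needs explicit acceptance criteria. The sketch below shows one possible form: a candidate model is kept only if neither precision nor recall drops by more than an allowed amount relative to the baseline. The `Metrics` class, the default tolerances, and the function name are assumptions for illustration, not AvaSure's defined criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    precision: float
    recall: float


def meets_criteria(
    baseline: Metrics,
    candidate: Metrics,
    max_recall_drop: float = 0.0,
    max_precision_drop: float = 0.02,
) -> bool:
    """True if the candidate stays within the allowed drop on both metrics."""
    return (
        candidate.recall >= baseline.recall - max_recall_drop
        and candidate.precision >= baseline.precision - max_precision_drop
    )
```

Under these defaults, a candidate that gains precision but loses recall would fail the check and be rolled back.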

Deployment Without Disruption: What is the Process for Onboarding New Hospitals with AI for Patient Safety?

For hospitals, the most important questions are how the system will behave in their specific environment and how disruptive deployment will be. AvaSure’s onboarding process is designed to answer those questions incrementally and transparently.

The work begins with understanding room configurations, typical camera locations, and the kinds of patients and use cases each unit expects to monitor. This can include having AvaSure team members stage representative scenarios in sample rooms, capturing video that reflects local layouts, lighting, and camera angles. This staged data helps verify that the baseline model behaves as expected before any live patient feeds are involved.

As cameras are connected, we run the models in background mode. The system processes live video, but alerts are not yet sent to staff. During this period, we collect anonymized frames of interest and review the patterns of potential alerts. This is also when we tune the operating point, adjusting the balance between precision and recall to fit the unit’s needs.

Once the hospital is comfortable with the system’s behavior, alerts are enabled for virtual safety attendants. The user interface will increasingly support structured feedback so that attendants can indicate whether an alert was helpful, spurious, or associated with an event the system should have recognized. These feedback signals, together with anonymized frames, feed back into our data and model improvement process. By gathering room dimensions, lighting, and arrangement details, we are able to use rendered scenes that are specific to each environment, streamlining the creation of training examples for new hospitals.

How to Extend Beyond AI for Patient Safety Monitoring

Falls and elopements are a natural starting point for behavior-aware monitoring because they are common, clinically important, and directly connected to existing continuous observation workflows. However, the same sensing and inference capabilities can support a broader set of safety and quality use cases over time.

AvaSure’s AI Augmented Monitoring strategy anticipates an expansion from Falls and Elopement into additional use cases such as hospital-acquired pressure injury prevention, infection-related behaviors, and staff duress. Environmental sensing capabilities, including detection of meal tray delivery and removal or patterns of in-bed movement, can contribute to these use cases by providing objective, continuous signals about patient status and care processes. Each new application will require its own feasibility studies, data collection plans, and validation steps, but they build on the same underlying platform and design approach.

Each of these additional use cases requires enhancements to the Computer Vision models to have them comprehend a wider variety of situations. Such enhancements can require additional or more complex models requiring additional computing power. AvaSure leverages OCI’s AI infrastructure offerings to bring to bear considerable GPU-powered computing to support an expanding range of use cases.

How Do We Integrate Security and Compliance into the Design of Healthcare AI Models?

Security for us is not a separate track from Artificial Intelligence; it is part of the design of the platform and the models from the beginning. AvaSure’s virtual care systems already operate in environments where SOC 2 and HIPAA expectations are the baseline, not an add-on, and the same standard applies to AI Augmented Monitoring. Every new service that touches patient data, from model pipelines to anonymization computing, is expected to pass formal design review, threat modelling, and, where appropriate, penetration testing before it is considered ready for production.

At the infrastructure level, our cloud strategy is built on a scalable, multi-tenant architecture designed to keep different users and services securely separated. Robust identity and access management ensures that only authorized components can communicate or access sensitive data, and every service operates with the minimum permissions required. Data moving through the system is protected by encryption, as is data stored in managed services. Comprehensive audit logging is a core part of our approach, recording authentication and authorization events, configuration updates, model changes, and administrative actions so that security and compliance teams can thoroughly review activity if needed.

For AI specifically, the same security-by-design approach applies. Security specialists review designs for new AI use cases during ideation rather than waiting for prototypes. The review looks at how video streams enter the system, where inference is performed, what outputs are persisted, and how PHI is handled or removed. This helps ensure that the introduction of GPU-backed inference or new data flows does not inadvertently expand the attack surface or weaken isolation guarantees.

The anonymization pipeline is an example of security and privacy concerns shaping the technical design. Rather than storing raw patient video, the system extracts short windows around events of interest and routes them to a separate anonymization service. That service applies privacy-preserving transforms and requires human confirmation that identifiable information has been removed before frames can be used for training or evaluation. All of this traffic is encrypted in transit, and anonymized images are encrypted at rest and stored with restricted access. This architecture allows the models to benefit from realistic data while maintaining clear boundaries around PHI.
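Two parts of this pipeline lend themselves to a short sketch: extracting short windows around events of interest, and gating frames on human confirmation. The code below is a simplified illustration under stated assumptions. Frame indices stand in for video, the 30-frame radius is arbitrary, and the `Frame` fields and function names are hypothetical. The anonymization transforms themselves are not shown.

```python
from dataclasses import dataclass
from typing import List, Tuple


def event_windows(
    event_frames: List[int], total_frames: int, radius: int = 30
) -> List[Tuple[int, int]]:
    """Return merged, inclusive (start, end) frame ranges around each event frame."""
    windows: List[Tuple[int, int]] = []
    for frame in sorted(event_frames):
        start = max(0, frame - radius)
        end = min(total_frames - 1, frame + radius)
        if windows and start <= windows[-1][1] + 1:
            # Overlapping or adjacent windows are merged into one.
            windows[-1] = (windows[-1][0], max(windows[-1][1], end))
        else:
            windows.append((start, end))
    return windows


@dataclass
class Frame:
    frame_id: int
    anonymized: bool = False
    reviewer_confirmed: bool = False


def eligible_for_training(frame: Frame) -> bool:
    """A frame may be used only after anonymization and human confirmation."""
    return frame.anonymized and frame.reviewer_confirmed
```

The gate is intentionally a conjunction: a frame that has been transformed but not yet confirmed by a reviewer stays out of the training and evaluation sets.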

In practice, ensuring security involves closely connecting monitoring activities with incident response protocols. A comprehensive strategy includes full observability across systems and processes, using tools like metrics, alerts, dashboards, and health checks to quickly detect and respond to any unusual activity. The same mechanisms that support autoscaling and automated rollback for availability also support security: if a change in configuration or a dependency introduces unexpected behavior, operators can detect it quickly and revert. Regular risk assessments, combined with continuous integration and deployment practices, are intended to keep the platform aligned with evolving threats and regulatory expectations rather than treating compliance as a static checklist.

From the hospital’s perspective, the outcome of this approach should be straightforward: AI features sit inside a platform that is already held to enterprise security and compliance standards, and any new capability is expected to meet those standards before it is offered in production. The same controls that protect virtual care today – access control, encryption, audit logging, and formal review – apply equally to behavior-aware monitoring and future AI use cases.

How Does AvaSure Scale AI for Patient Safety in Modern Health Systems?

Building AI for patient safety is not simply a matter of choosing a model architecture or training on a large data set. It is a system-level effort that spans model design, data collection, anonymization, infrastructure, onboarding, monitoring, security, and governance. Each part influences how the technology behaves in practice and how much clinicians and patients can rely on it.

For AvaSure, the core elements of that system are clear. We focus on understanding behavior in context rather than isolated frames. We adopt a stepwise development approach that involves staged experiments, demonstrations, and validation in real clinical settings. We learn from real rooms through an anonymizing data collection system that protects identity while concentrating data collection where it matters most. We operate on a cloud platform designed for reliability, scalability, and security. Lastly, we treat hospitals as partners in an ongoing improvement process rather than one-time installations.

AvaSure is building AI for patient safety into the virtual care platform that customers already use for continuous observation and virtual nursing. Future blogs will explore specific components in more depth, including anonymization and data curation, our hybrid edge–cloud roadmap, and the evolution from single-use models to a suite of AI augmented monitoring applications. For now, our aim is to make the underlying approach visible so that hospital leaders and clinicians can make informed decisions about how AI fits into their own patient safety strategies.
